TAG

Translating Affective Gesture

In collaboration with Caitlin Morris

Cognitive Enhancements at the MIT Media Lab with Pattie Maes

Abstract

We rely on our interpretation of others’ gestures and body language for many communication cues, perhaps even more than we rely on the content of verbal language. Differences in gestural dialects can create challenges in communicating intent and in social decision making across socio-cultural divides. We propose a system that translates the emotional intent of individuals’ physical gestures, enabling more fluid communication in social interactions and acting as a training system for the development of empathetic mirroring. The TAG system aims to create greater empathy and reduce implicit bias between people of different social, geographical, and cultural contexts.

Author Keywords

HCI; social devices; gesture recognition; social mirroring; machine learning; wearable devices.

ACM Classification Keywords

Human-centered computing: Interaction design theory, concepts and paradigms; Human-centered computing: Ubiquitous and mobile computing systems and tools

Motivation

Gesture can be divided into several categories, particularly intrinsic (innate gestures shown from birth, such as nodding as an affirmative) and extrinsic (learned via cultural cues). Extrinsic gestures have been shown to be strongly conditioned by socio-psychological factors, leading to cultural differences in affective gestural expression between groups [1][2]. These interpersonal differences also inhibit the function of mirror neurons, making it more difficult to reach empathetic understanding with people of different backgrounds. Mirror neurons, which fire when we see a motion-based behavior that we can recognize and mimic, enable us to feel a biological understanding of others’ emotions, intent, and mental state [3]. Mirroring behavior has been linked to language development, learning, and social decision making [4].

Many previous studies have looked at the relationship between gesture and emotion. A majority of these studies acknowledge the differences in affective expression from individual to individual, especially between different cultures, and most include an individual-level calibration phase in their experimental design [5][6]. These studies have made progress in establishing the importance of gesture in affective communication, and have shown that affective state can be determined from gesture alone by both human observers and machine learning systems. However, they stop at analysis and offer no method for using this information to improve real-world interactions. One project, SuperPower Glass [7], provides real-time feedback interpreting facial expressions for children with autism. While this is very effective for individuals with autism spectrum disorder, TAG is designed to work more generally with intercultural differences that may hinder professional and personal interactions and contribute to bias.

Background

The research background for the TAG system covers several categories: the role of neural mirroring in empathy and social decision making, the importance of cross-cultural translation in reducing implicit bias, the importance of gesture in conveying emotion, and the effectiveness of machine learning systems in detecting emotion based on gesture.

We have previously discussed the importance of mirror neurons in improving understanding between individuals. Bedder et al. reference this research in “A mechanistic account of bodily resonance and implicit bias” [8], in which they emphasize the importance of bodily resonance - perceiving someone as “other” versus identifying them as similar to oneself - in reducing implicit bias, which is critical in shaping our attitudes toward other people. We hypothesize that the TAG system can help with increasing bodily resonance by providing natural cues around the gestures and affective expressions of others.

We have looked into a variety of research approaches to identifying emotional intent through gesture with machine learning. Emotion Analysis in Man-Machine Interaction Systems (Balomenos et al. [9]) uses a camera-based hand tracking system with Hidden Markov Models (HMMs) for time-series gesture classification. The study emphasizes the importance of individual gesture dialect training: “A mutual misclassification… is mainly due to the variations across different individuals. Thus, training the HMM classifier on a personalized basis is anticipated to improve the discrimination between these two classes.”

Balomenos et al. also emphasize the importance of motion profiles rather than fixed positioning in gesture, finding different affective assignments based on gestural speed and character. This led us to include an IMU in our design, in tandem with EMG, to capture both fixed gestural postures and motion characteristics.

Kapur et al. found that accurate affective identification through gesture was “...achieved by looking at the dynamics and statistics of the motion parameters, which is what we use for features in the automatic system” [10]. In this study, a motion capture system abstracting gesture into 14 points across the body yielded 93% accuracy in affective interpretation by human viewers, and 84% to 92% accuracy from a machine learning system (SMO support vector machine). These prior works support the concept that gesture classification is a valid means of interpreting emotional intent, and reinforce the need for individually trained classifiers, given the differences in gestural communication between individuals.

User Experience

This system is designed for two primary phases, a training phase and a real-time use phase. As the system is dependent on accurate gesture-emotion calibration for each individual, both the training stage and emotional classification are important to experimental testing and real-world use conditions.

We have looked into several prior experiments for methods development, including Kapur et al.: Gesture-Based Affective Computing on Motion Capture Data, which determined that “although arguably in acting out these emotions the subject’s cognitive processes might be different than the emotion depicted, it turns out that the data is consistently perceived correctly even when abstracted [in the real-world scenario]”. We have based our training methods on this previous research.

In the training phase, the user is asked to act out a specific emotional scenario based on a prompt card while wearing the TAG device. Gesture subsections are extracted based on emotion-specific timestamps, and used to feed the machine learning classification software. The user is asked to self-identify the emotions they were expressing at each timestamp to confirm accurate emotional assignment.
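The timestamp-based extraction described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the `LabeledSegment` type, the `extract_segments` function, and the `(start, end, emotion)` annotation format are all assumptions made for the example, as is the idea that each sample is a single 11-value frame.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class LabeledSegment:
    """A run of sensor frames tagged with the emotion the user confirmed."""
    emotion: str
    frames: List[Sequence[float]]


def extract_segments(
    timestamps: Sequence[float],
    frames: Sequence[Sequence[float]],
    annotations: Sequence[Tuple[float, float, str]],
) -> List[LabeledSegment]:
    """Cut a recording into emotion-labeled segments.

    timestamps  -- time in seconds of each frame (hypothetical format)
    frames      -- one sensor frame per timestamp
    annotations -- (start, end, emotion) windows confirmed by the user;
                   start is inclusive, end is exclusive
    """
    segments = []
    for start, end, emotion in annotations:
        chosen = [f for t, f in zip(timestamps, frames) if start <= t < end]
        if chosen:  # skip windows that contain no recorded frames
            segments.append(LabeledSegment(emotion, chosen))
    return segments


# Usage: five frames recorded at 2 Hz, two confirmed emotion windows.
times = [0.0, 0.5, 1.0, 1.5, 2.0]
data = [[float(i)] * 11 for i in range(5)]
labeled = extract_segments(times, data, [(0.0, 1.0, "happiness"), (1.0, 2.5, "anger")])
print([(s.emotion, len(s.frames)) for s in labeled])
```

Each resulting segment could then be fed to the classifier as one training example for its emotion label.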

Additionally, the mapping of colors (displayed on the device LEDs) to emotions needs to be calibrated for each individual. The user will be shown a standard color wheel and given the base set of six universal emotions (anger, fear, disgust, happiness, sadness, surprise). The user will assign their color associations to each emotion, which becomes the user’s affective color mapping. This information is communicated between users in a real-world interaction, so that in a conversation between Person A and Person B, in which Person A is gesturing, the color mappings displayed on A’s device will be calibrated to B’s settings, enhancing B’s understanding of A’s gestural emotional intent.
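The exchange of color mappings between two users can be illustrated with a small sketch. The RGB values, the dictionary layout, and the function names `validate_mapping` and `color_for_viewer` are hypothetical; the text above does not specify how mappings are stored or transmitted.

```python
from typing import Dict, Tuple

RGB = Tuple[int, int, int]

# The base set of six universal emotions used during calibration.
UNIVERSAL_EMOTIONS = ("anger", "fear", "disgust", "happiness", "sadness", "surprise")


def validate_mapping(mapping: Dict[str, RGB]) -> Dict[str, RGB]:
    """Check that a user's affective color mapping covers all six emotions."""
    missing = [e for e in UNIVERSAL_EMOTIONS if e not in mapping]
    if missing:
        raise ValueError(f"mapping is missing emotions: {missing}")
    return dict(mapping)


def color_for_viewer(classified_emotion: str, viewer_mapping: Dict[str, RGB]) -> RGB:
    """Pick the color to show on the gesturer's device.

    The emotion comes from the gesturer's (Person A's) classifier, but the
    color is looked up in the viewer's (Person B's) mapping.
    """
    return viewer_mapping[classified_emotion]


# Usage: Person B's calibrated mapping (example colors only).
person_b = validate_mapping({
    "anger": (255, 0, 0),
    "fear": (128, 0, 128),
    "disgust": (0, 128, 0),
    "happiness": (255, 200, 0),
    "sadness": (0, 0, 255),
    "surprise": (255, 128, 0),
})
print(color_for_viewer("happiness", person_b))  # (255, 200, 0)
```

Keeping classification on the gesturer's side and color lookup on the viewer's side means each user only needs to calibrate their own color associations once.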

Design and Implementation

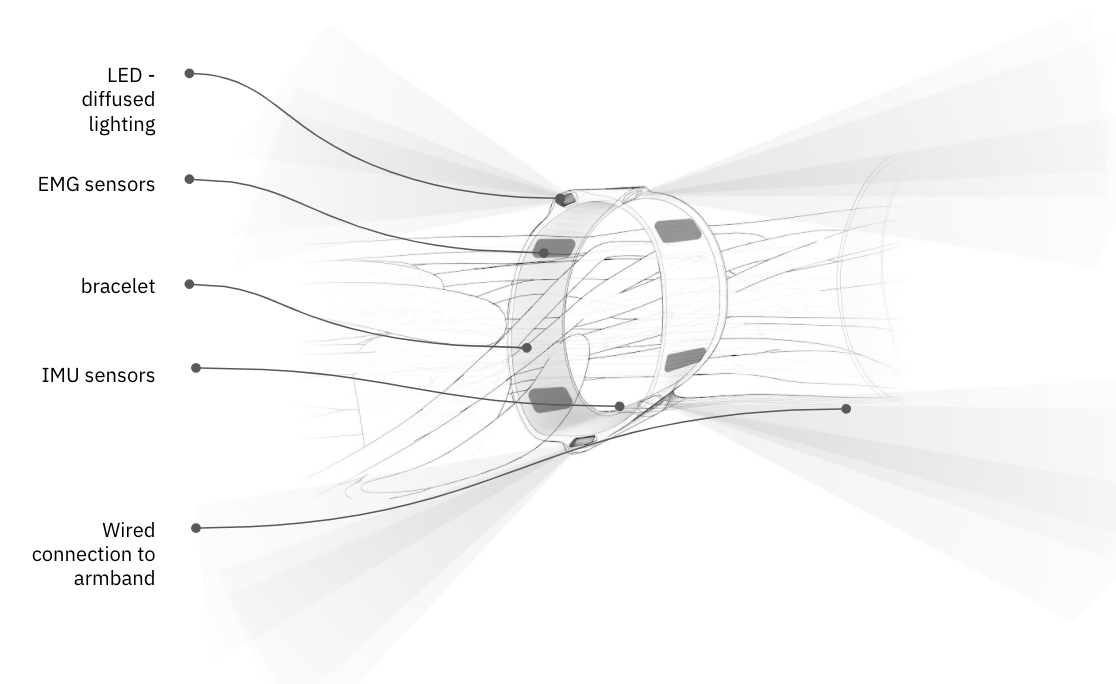

The prototype device hardware is built around a Myo armband, accessing 8 channels of raw EMG (electromyography) data and 3 channels of IMU (inertial measurement unit) data. The wearable device includes a custom band that encloses the Myo armband, attached via a lightweight wire harness to a wrist-mounted LED display, custom designed to cast the light from the LEDs out onto the skin. The device communicates wirelessly over Bluetooth with the control computer. Future versions would use a custom array of EMG electrodes, mounted in a thin-profile device worn on the forearm, and a more portable computer.

The 11 channels of gesture data (combined EMG and IMU) are received by a computer over Bluetooth and are filtered and condensed by a custom app written in C++ using the openFrameworks toolkit. This software sends the combined data over OSC (Open Sound Control) to Wekinator, an open-source machine learning application. In the training scenario, Wekinator is used to train repeated similar gestures to an associated output. In the live scenario, it performs real-time classification of the data, producing a single-channel output which places each unique gesture classification at a point along an affective spectrum. This single-channel output is sent over OSC to a custom application written in Processing, which translates the classified output to a gradient of color information and transmits that information to a microcontroller for live updating of the wrist-worn LED display.
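Two stages of this pipeline can be sketched in a few lines. The sketch is in Python for brevity rather than the C++ and Processing used in the prototype, and it rests on several assumptions not stated above: that each frame lists the 8 EMG channels followed by the 3 IMU channels, that "condensing" means a per-window RMS for EMG and a mean for IMU, that the classifier output is normalized to the range 0 to 1, and that the gradient runs between two fixed endpoint colors. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

EMG_CHANNELS = 8  # assumed to come first in each frame
IMU_CHANNELS = 3  # assumed to follow the EMG channels


def condense_window(window: Sequence[Sequence[float]]) -> List[float]:
    """Reduce a window of 11-channel frames to one 11-value feature vector.

    EMG channels use root-mean-square amplitude (an assumed choice, common
    for EMG); IMU channels use the plain mean over the window.
    """
    if not window:
        raise ValueError("window must contain at least one frame")
    n = len(window)
    features = []
    for ch in range(EMG_CHANNELS):
        features.append(math.sqrt(sum(frame[ch] ** 2 for frame in window) / n))
    for ch in range(EMG_CHANNELS, EMG_CHANNELS + IMU_CHANNELS):
        features.append(sum(frame[ch] for frame in window) / n)
    return features


def gradient_color(
    value: float,
    low: Tuple[int, int, int] = (0, 0, 255),
    high: Tuple[int, int, int] = (255, 0, 0),
) -> Tuple[int, int, int]:
    """Map a classifier output in [0, 1] to an RGB color by linear interpolation.

    Values outside [0, 1] are clamped to the nearest endpoint color.
    """
    t = min(1.0, max(0.0, value))
    return tuple(round(a + (b - a) * t) for a, b in zip(low, high))


# Usage: condense two frames, then color two example classifier outputs.
window = [[3.0] * 8 + [1.0, 2.0, 3.0], [-3.0] * 8 + [3.0, 2.0, 1.0]]
print(condense_window(window))
print(gradient_color(0.0), gradient_color(1.0))
```

In the prototype, the feature vector would be sent to Wekinator over OSC and the resulting color forwarded to the microcontroller; both transports are omitted here.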

Related Work

Other projects have examined parts of the main hypothesis of a gestural connection to emotion. In a paper titled “A speaker’s gesture style can affect language comprehension”, the authors suggest that the personal communication style of speakers has a significant impact on language comprehension, especially when integrating gesture and speech (Obermeier et al. [11]). Another project, titled “Translating Affective Touch into Text”, presents an experience where haptic inputs are translated into text by mapping emotional profiles of tactile features (Shapiro et al. [12]). There is a clear emergent trend in research on the translation of affective cues beyond facial and verbal inputs.

Future Work

With the TAG system introducing the possibility of affective gestural translation, a range of future applications opens up. First and foremost, this technology could increase emotional mobility across geographies and cultures, lessening the friction in communication between parties of different backgrounds. Furthermore, users could develop highly personalized emotive profiles that are nested within other social scales of gestural norms. This could be pivotal in how individuals develop and retain idiosyncratic schemas of communication. There is also the potential for increased self-awareness around body language, and further entraining of proprioceptive-emotional cues. Lastly, further exploration is needed into how TAG may enhance virtual communication as technology moves towards a synthesized future, allowing for multimodal, low-bandwidth transfer of emotional data.

References

1. Noroozi et al. Survey on Emotional Body Gesture Recognition https://arxiv.org/pdf/1801.07481.pdf

2. Efron, D. Gesture and Environment. https://psycnet.apa.org/record/1942-00254-000

3. “Mirror neurons: Enigma of the metaphysical modular brain” https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3510904/

4. "Autism Linked To Mirror Neuron Dysfunction." ScienceDaily. ScienceDaily, 18 April 2005. www.sciencedaily.com/releases/2005/04/050411204511.htm

5. Kapur et al., Gesture-Based Affective Computing on Motion Capture Data

6. Balomenos et al. https://link.springer.com/content/pdf/10.1007%2F978-3-540-30568-2_27.pdf

7. http://autismglass.stanford.edu/

8. Bedder, Bush, et al. A mechanistic account of bodily resonance and implicit bias. https://doi.org/10.1016/j.cognition.2018.11.010

9. Balomenos et al. https://link.springer.com/content/pdf/10.1007%2F978-3-540-30568-2_27.pdf

10. Kapur et al. https://link.springer.com/content/pdf/10.1007%2F11573548_1.pdf

11. Christian Obermeier, Spencer D. Kelly, Thomas C. Gunter, A speaker’s gesture style can affect language comprehension: ERP evidence from gesture-speech integration, Social Cognitive and Affective Neuroscience, Volume 10, Issue 9, September 2015, Pages 1236–1243, https://doi.org/10.1093/scan/nsv011

12. Daniel Shapiro, Zeping Zhan, Peter Cottrell, and Katherine Isbister. 2019. Translating Affective Touch into Text. In Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (CHI EA '19). ACM, New York, NY, USA, Paper LBW0175, 6 pages. DOI: https://doi.org/10.1145/3290607.3313015

Gesture mapping for enhancement of empathy-building mirroring behavior in cross-cultural communication

Figure 1: The wearable device, showing EMG and IMU sensors and embedded LEDs.